Our quantum architect, Mark Webber, explains why quantum error correction is key to creating “useful” quantum computers and why we need millions of qubits.

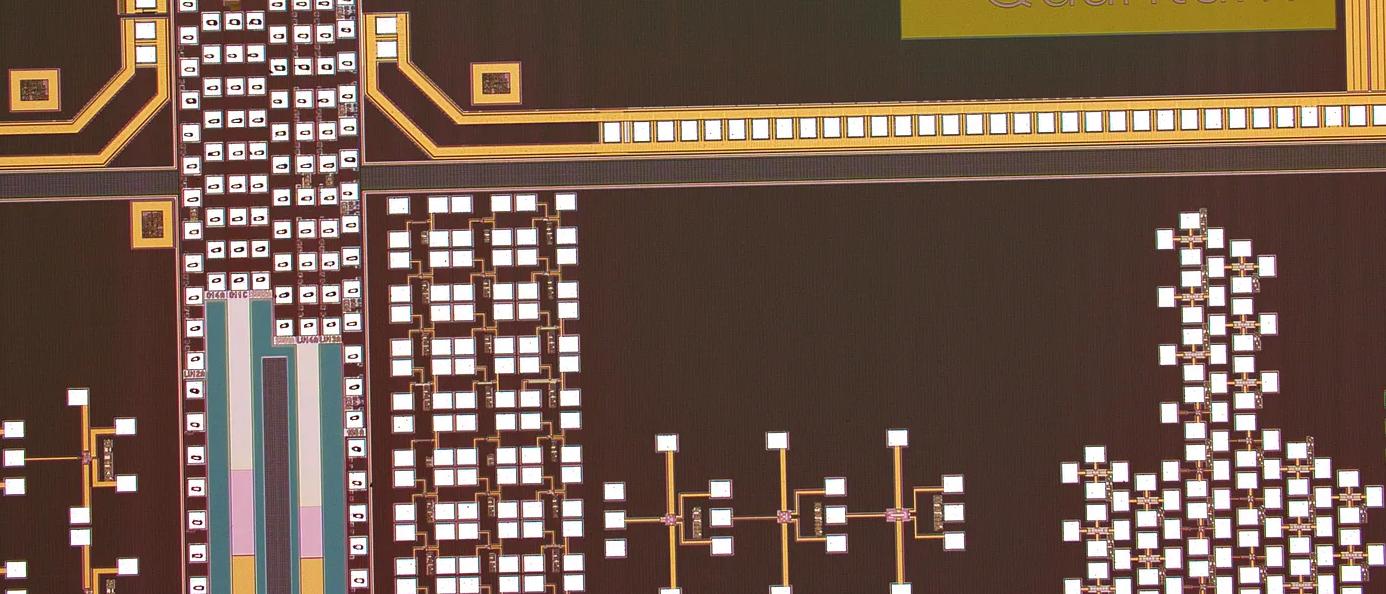

Universal Quantum’s devices on silicon wafers

Mistakes happen, and in quantum computing, you can’t avoid them. This is because, by their very nature, qubits are error-prone and difficult to control. As a result, quantum computers are unstable, complex systems and addressing these errors is key.

Fortunately, quantum error correction exists: a family of techniques that detect and correct these errors. It comes with a large overhead, because many additional physical qubits are needed to encode a single logical qubit. A powerful error-corrected quantum computer may need millions of qubits.

No qubit is an island

Qubits are incredibly fragile and prone to noise. The slightest environmental change, in temperature or pressure for example, can disrupt the quantum information. Hardware types vary in how long they can keep their qubits protected. For superconducting devices, it is on the order of milliseconds at best, whereas trapped-ion devices can keep their qubits protected for multiple seconds.

For quantum computers to work, we must find ways to keep the quantum information protected for long enough to run powerful quantum algorithms, which may take hours or even longer.

The situation is complicated, though, because these methods must both detect and correct errors. The measurements they rely on must also be chosen with care, because measuring a qubit can collapse its state and destroy important quantum information.

In 1995, Peter Shor, now a professor at MIT, published a proof that quantum error-correcting codes exist to overcome this issue.

Further proofs then showed that, in theory, these codes can push logical error rates arbitrarily close to zero, provided the physical error rate is low enough. This work (along with many other publications in the field) essentially proved that high-impact quantum computing with imperfect qubits is indeed possible.

The quantum computing community is now working out how to realise this ambition at both the software and hardware level — and we’re getting closer.

The state of quantum error correction

For quantum error correction to work, the information stored in a single qubit is distributed across other supporting qubits.

These supporting qubits are called “physical” qubits and together they form a “logical” qubit. The quantum logical information is distributed across many physical qubits in such a way that errors can be detected and corrected.

When a physical error occurs, it is detected by repeatedly checking certain properties of the qubits. Then, a correction operation can be applied to the physical qubits, preventing any errors from occurring at the logical level.
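To make the idea of "checking certain properties" concrete, here is a minimal Python sketch of the simplest textbook example, the three-bit repetition code. It is a classical stand-in and not a simulation of real quantum hardware: the function names are illustrative, and the parity checks play the role that indirect measurements play on a real device, revealing where an error sits without reading the stored value directly.

```python
def encode(bit):
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]

def syndrome(word):
    """Two parity checks: they compare neighbours, not the stored value."""
    return (word[0] ^ word[1], word[1] ^ word[2])

# Which physical bit to flip back for each pattern of parity checks.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(word):
    """Apply the correction that the parity checks point to."""
    fixed = list(word)
    index = CORRECTION[syndrome(word)]
    if index is not None:
        fixed[index] ^= 1
    return fixed

def decode(word):
    """Read the logical bit back out (valid once the word has been corrected)."""
    return word[0]

word = encode(1)
word[2] ^= 1                   # a single physical error on the third bit
print(syndrome(word))          # (0, 1): the checks point at the third bit
print(decode(correct(word)))   # 1: the logical bit survives
```

A single flipped bit is located and undone. If two bits flip at the same time, the checks point at the wrong bit and the "correction" turns the damage into a logical error.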

Depending on the nature of the hardware you use and the type of algorithm you choose to run, you may need hundreds or thousands of physical qubits to support a single logical qubit.

This doesn’t guarantee the protection of the logical qubit either. A logical error can still occur if enough physical qubits experience errors at the same time. However, provided the physical error rate is low enough, the logical error rate decreases exponentially as more physical qubits are added.
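The exponential suppression can be illustrated with a toy model. The sketch below assumes a classical repetition code decoded by majority vote, with each physical bit flipping independently with probability `p`. It is not the code used on any real device, and the value `p = 0.01` is an arbitrary choice for illustration.

```python
from math import comb

def logical_error_rate(n, p):
    """Probability that more than half of n bits flip (n odd), so majority vote fails."""
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )

# Each pair of extra physical bits lowers the logical error rate further.
for n in (1, 3, 5, 7, 9):
    print(n, logical_error_rate(n, 0.01))
```

In this model, a logical error needs more than half of the physical bits to fail together, which becomes rapidly less likely as the code grows.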

One logical qubit is not enough to complete complex calculations to do something useful for society. We will likely need hundreds or (maybe) thousands of logical qubits, each one supported by hundreds or thousands of physical qubits. This is where the requirement for millions of qubits comes from.
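As a rough back-of-the-envelope check, the arithmetic can be written out in a few lines of Python. The round numbers 100 and 1,000 are illustrative stand-ins for "hundreds" and "thousands", not specifications of any particular machine.

```python
def total_physical_qubits(logical_qubits, physical_per_logical):
    """Total machine size: every logical qubit needs its own physical qubits."""
    return logical_qubits * physical_per_logical

low = total_physical_qubits(100, 100)        # hundreds of each
high = total_physical_qubits(1_000, 1_000)   # thousands of each
print(f"{low:,} to {high:,} physical qubits")  # 10,000 to 1,000,000 physical qubits
```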

The base physical error rate must get below a certain critical value for the error correction protocol to actually be worth the effort of encoding. Once this point is reached, every further improvement to the physical gate fidelities decreases the number of physical qubits that are required per logical qubit.
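To see how the critical value and the qubit count interact, here is a hedged sketch based on a widely quoted rule of thumb for the surface code, in which the logical error rate scales roughly as `a * (p / p_th) ** ((d + 1) / 2)` for code distance `d`. The threshold `p_th = 0.01`, the prefactor `a = 0.1`, the target logical error rate and the `2 * d * d - 1` qubit count (one common surface code layout) are all illustrative assumptions, not figures from Universal Quantum.

```python
def logical_error_rate(p, d, p_th=0.01, a=0.1):
    """Rule-of-thumb logical error rate for physical error rate p and distance d."""
    return a * (p / p_th) ** ((d + 1) / 2)

def min_distance(p, target, p_th=0.01, a=0.1):
    """Smallest odd distance whose estimated logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("at or above threshold: adding qubits does not help")
    d = 3
    while logical_error_rate(p, d, p_th, a) > target:
        d += 2
    return d

def physical_qubits_per_logical(d):
    """Data plus measurement qubits for one distance-d patch (assumed layout)."""
    return 2 * d * d - 1

# Better physical error rates need a smaller code for the same target.
for p in (5e-3, 2e-3, 5e-4):
    d = min_distance(p, target=1e-12)
    print(p, d, physical_qubits_per_logical(d))
```

Under these assumptions, a physical error rate just below the threshold needs a very large code, while each further improvement shrinks the distance and therefore the number of physical qubits per logical qubit.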

The efficiency of the error correction protocol can also be increased by making use of the physical connectivity of the underlying hardware. At Universal Quantum, we have a high-fidelity method for enabling full connectivity between qubits that involves physically shuttling ions around the device. This essentially “frees” the qubits to move around the computer and “talk” to one another.

This may mean that we can use a different error correction protocol that is more efficient than the standard surface code. The surface code is well suited to superconducting devices because it relies only on nearest-neighbour interactions (where a qubit is “stuck” in a defined position and can only “talk” to its neighbouring qubits).

Research into quantum error correction has also made great strides since the late 1990s. A range of mathematical techniques now exists that reduce the associated overheads or make it easier to perform computations on logical qubits without disturbing the encoded information.

Are we nearly there yet?

Without error correction, the answers that a quantum computer spits out could be nonsense. But the path remains a long one. To do complex calculations, we must dramatically scale up the number of high-quality qubits.

That’s a problem Universal Quantum has focused on from day one, using six technology pillars to create a quantum computer that truly scales. You can find out more about our work at www.universalquantum.com.